The trusted AI analyst for teams that move fast.

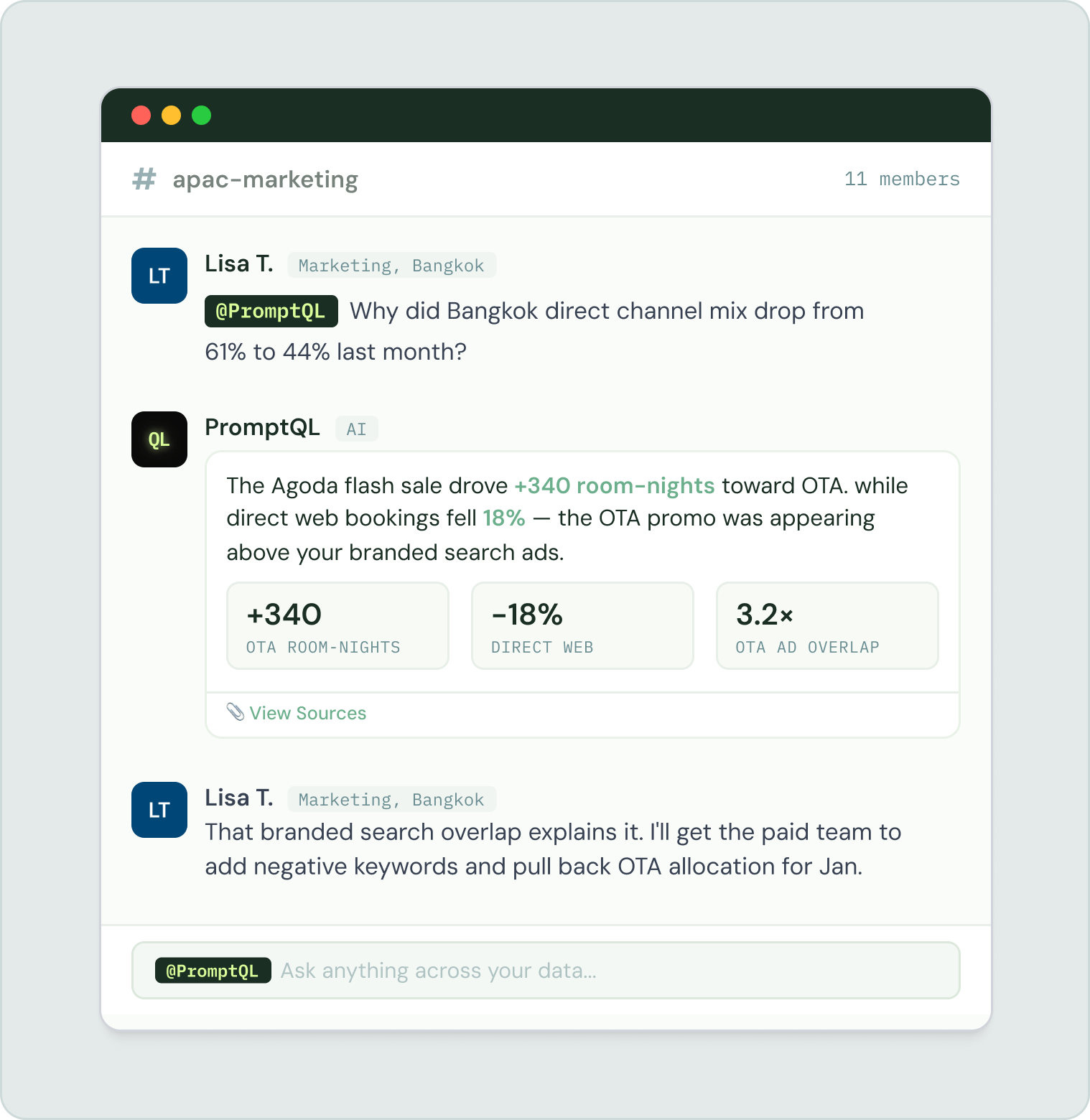

Embed PromptQL wherever frequent, high-impact data questions demand fast, trusted answers.

Trusted at scale by

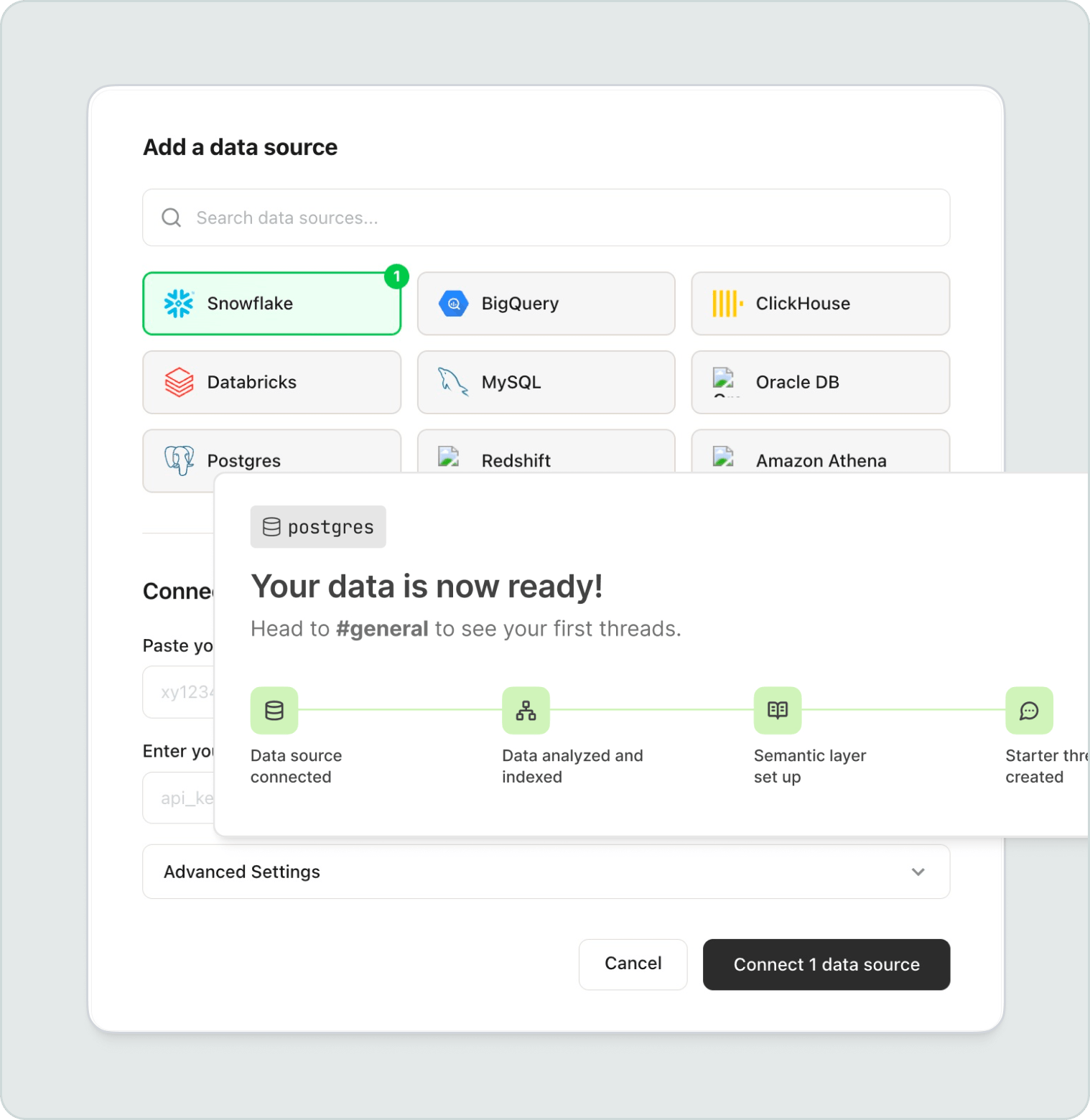

Connect any data, any context. No prep needed.

Keep data where it is, as it is

Connect your warehouse, databases, SaaS apps, and APIs as they exist today. PromptQL introspects the schemas to build a unified data graph, without moving or reshaping your data.

Go from data to questions in minutes.

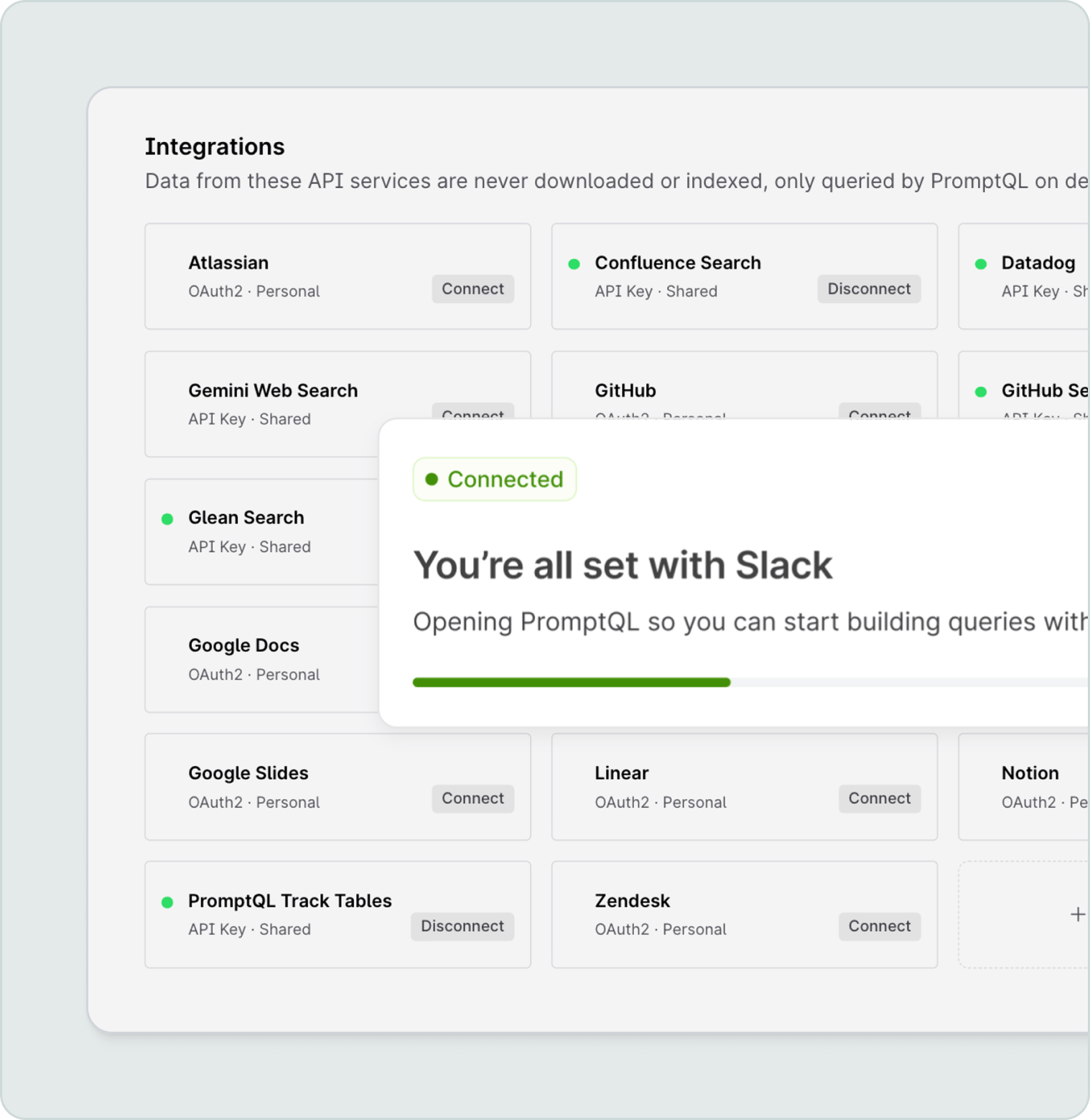

No upfront context engineering

Seed business context from Slack, Google Drive, GitHub, or wherever knowledge already lives. Or start from scratch. No semantic layer required on day one.

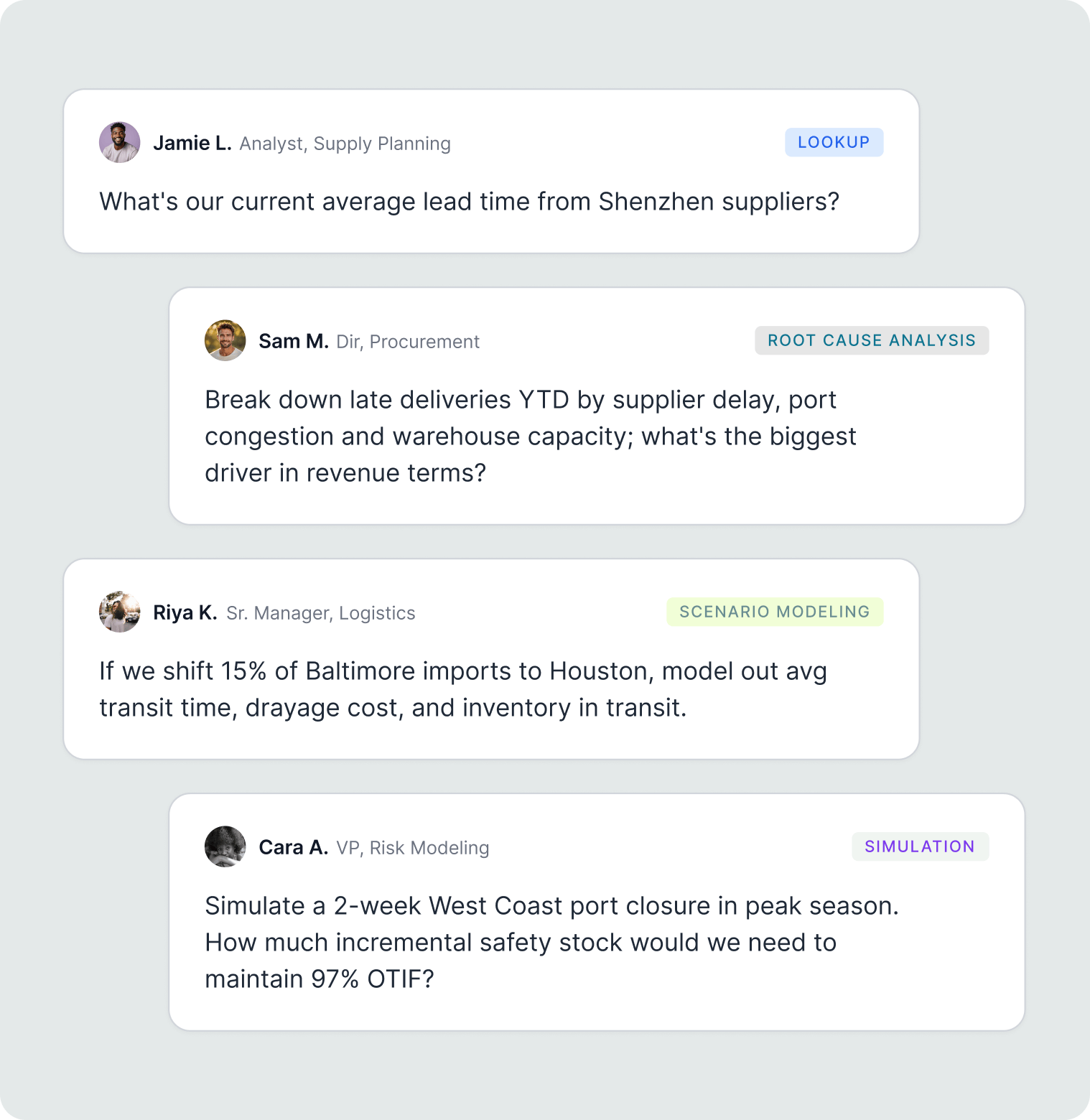

One stop for all your data questions

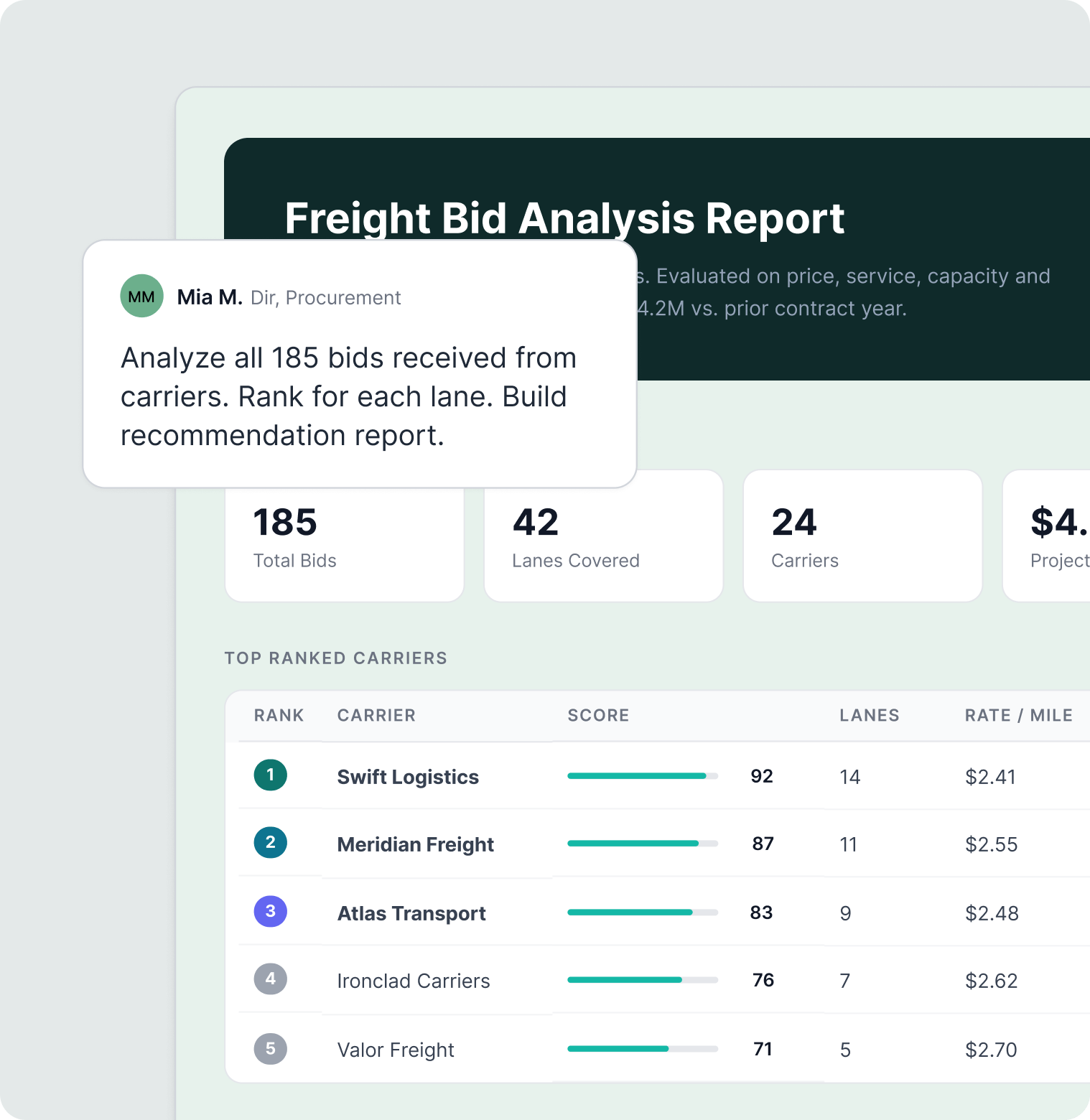

Simple to complex

From quick descriptive questions to deep, multi-source, multi-hop investigations. Every question gets a well-reasoned answer you can trust and act on.

Dashboards & reports

Generate board-ready dashboards and reports, automatically aligned with your brand style and audience.

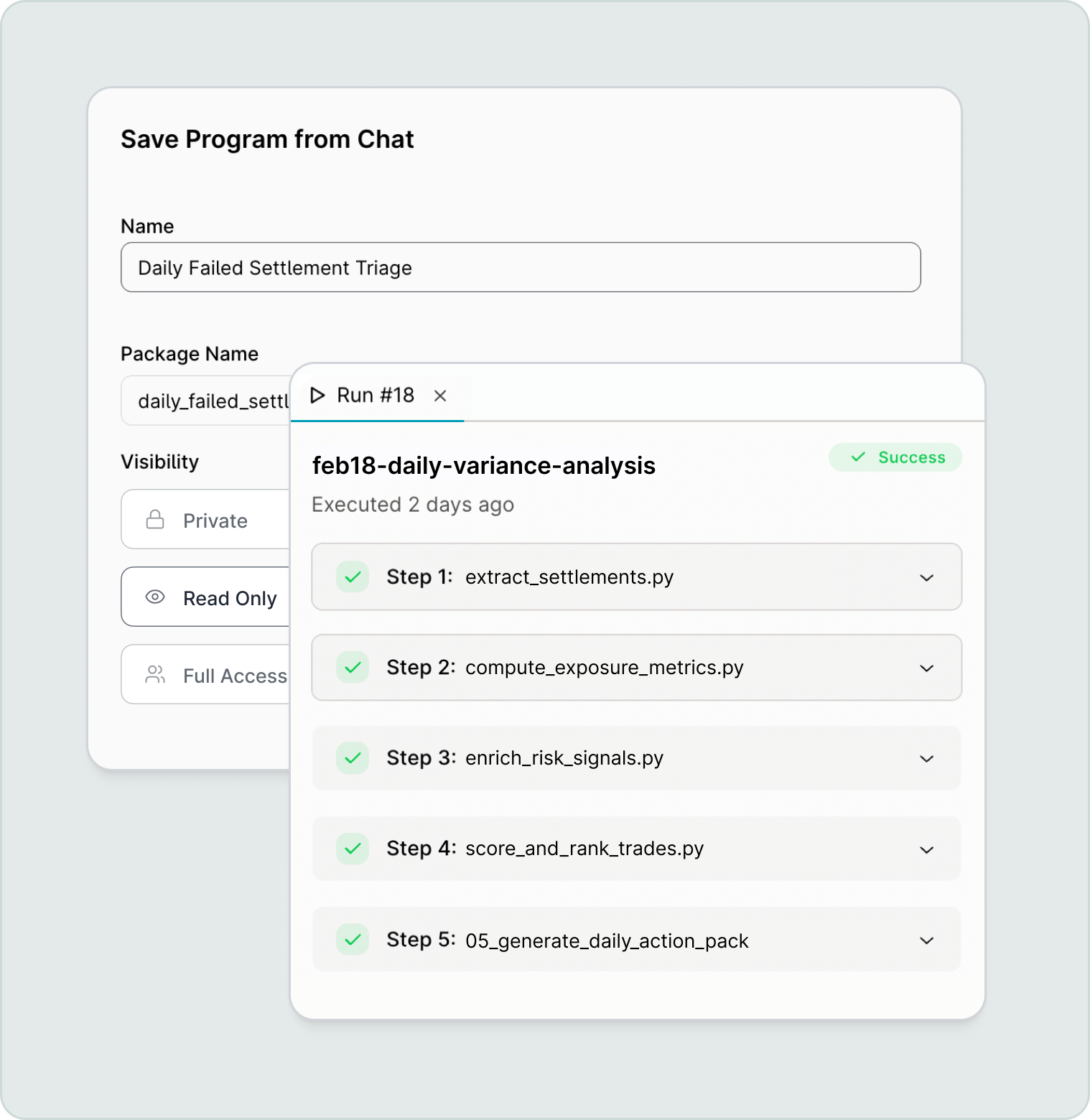

Automate the repetitive

Turn recurring workflows into reusable artifacts for the team. Ship analysis once, use it forever.

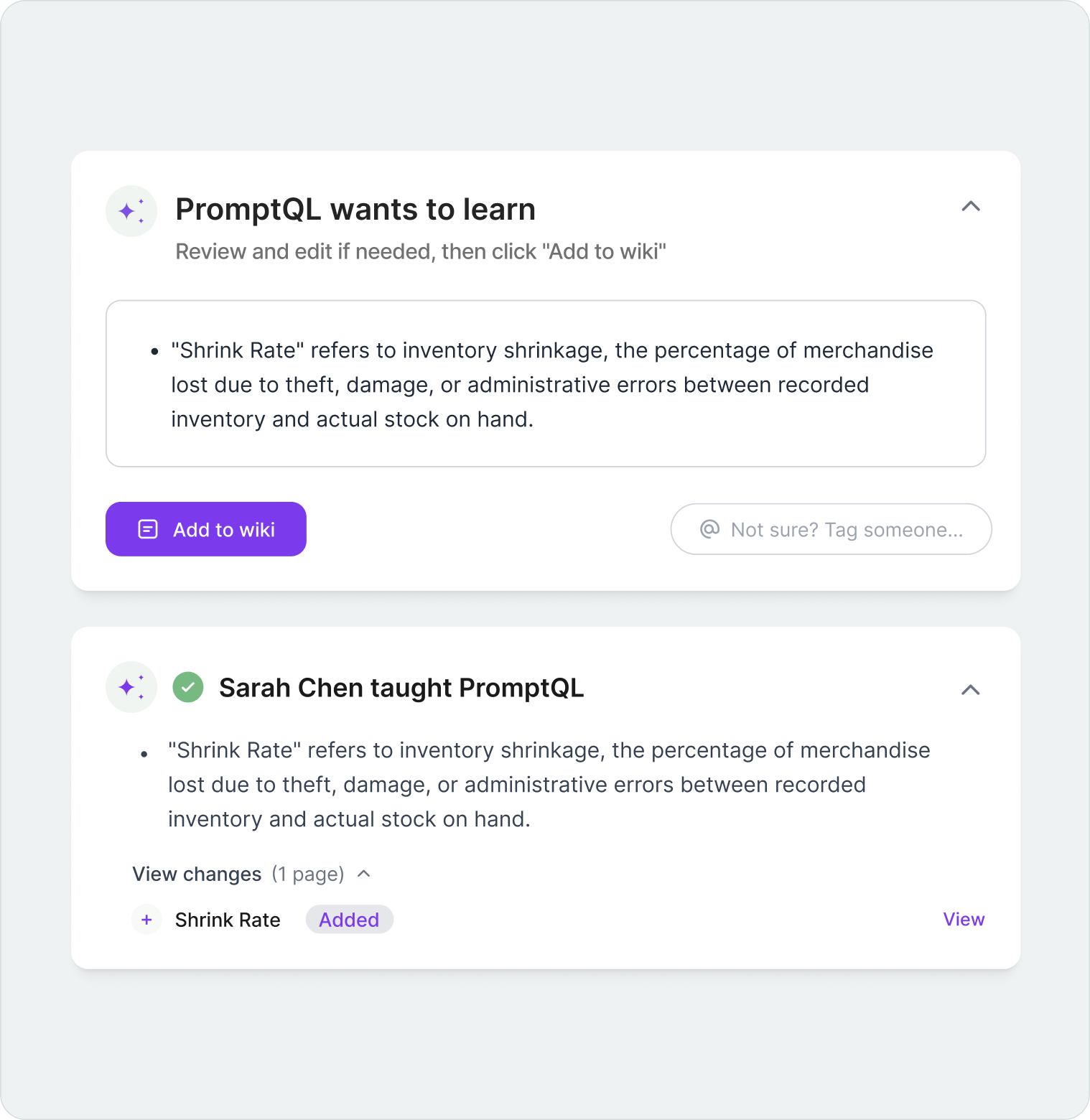

From semantic layer to operational wiki → AI accuracy

Traditional semantic layers weren't built for AI. They're rigid, centrally managed, and detached from day-to-day business.

PromptQL captures business context the way modern teams work: a dynamic, Wikipedia-style context graph that's expressive, open, and collaboratively maintained.

![PromptQL operational wiki](https://res.cloudinary.com/dh8fp23nd/image/upload/v1772022398/promptql-website/product/wiki_es50uh.png)

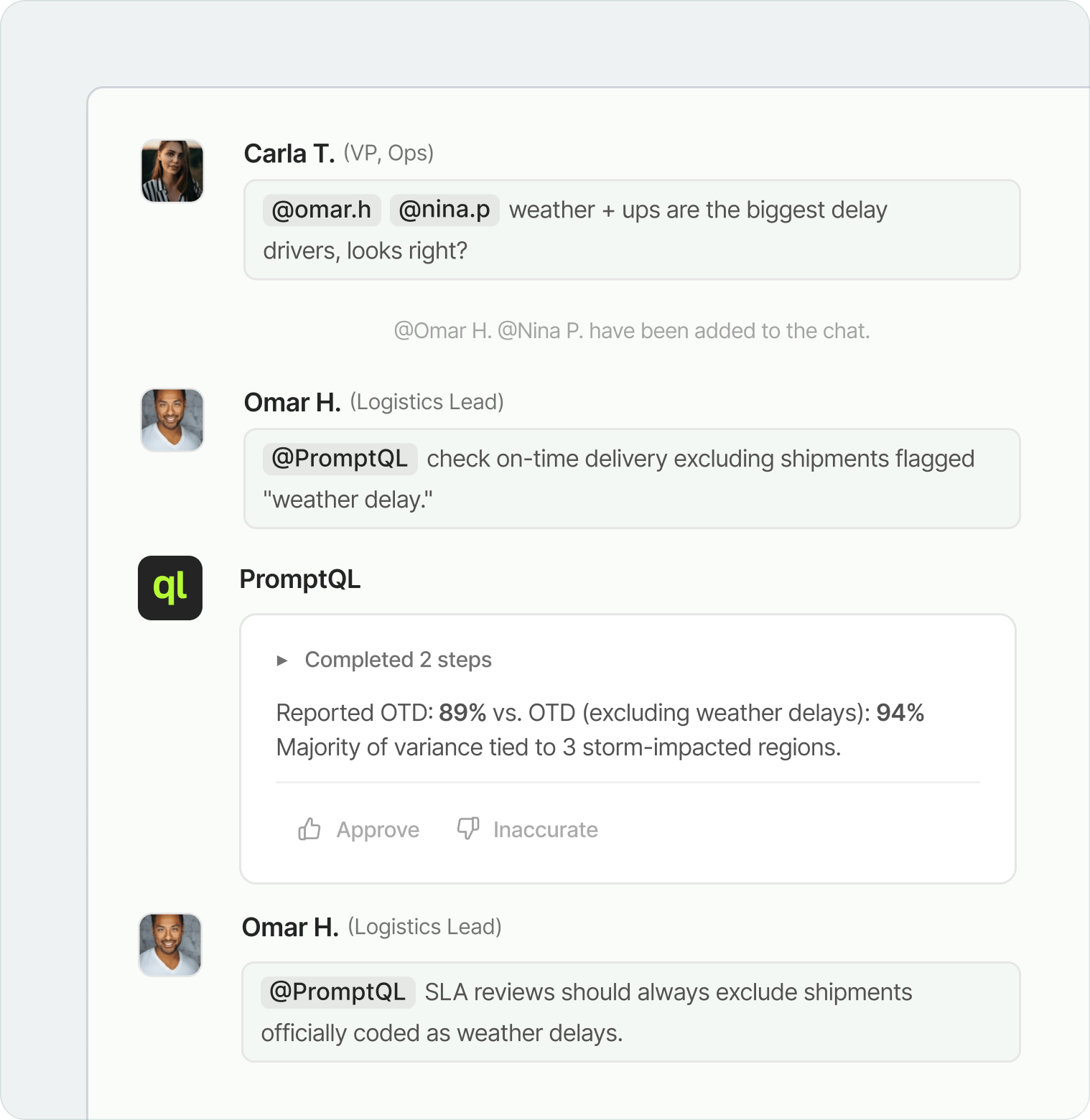

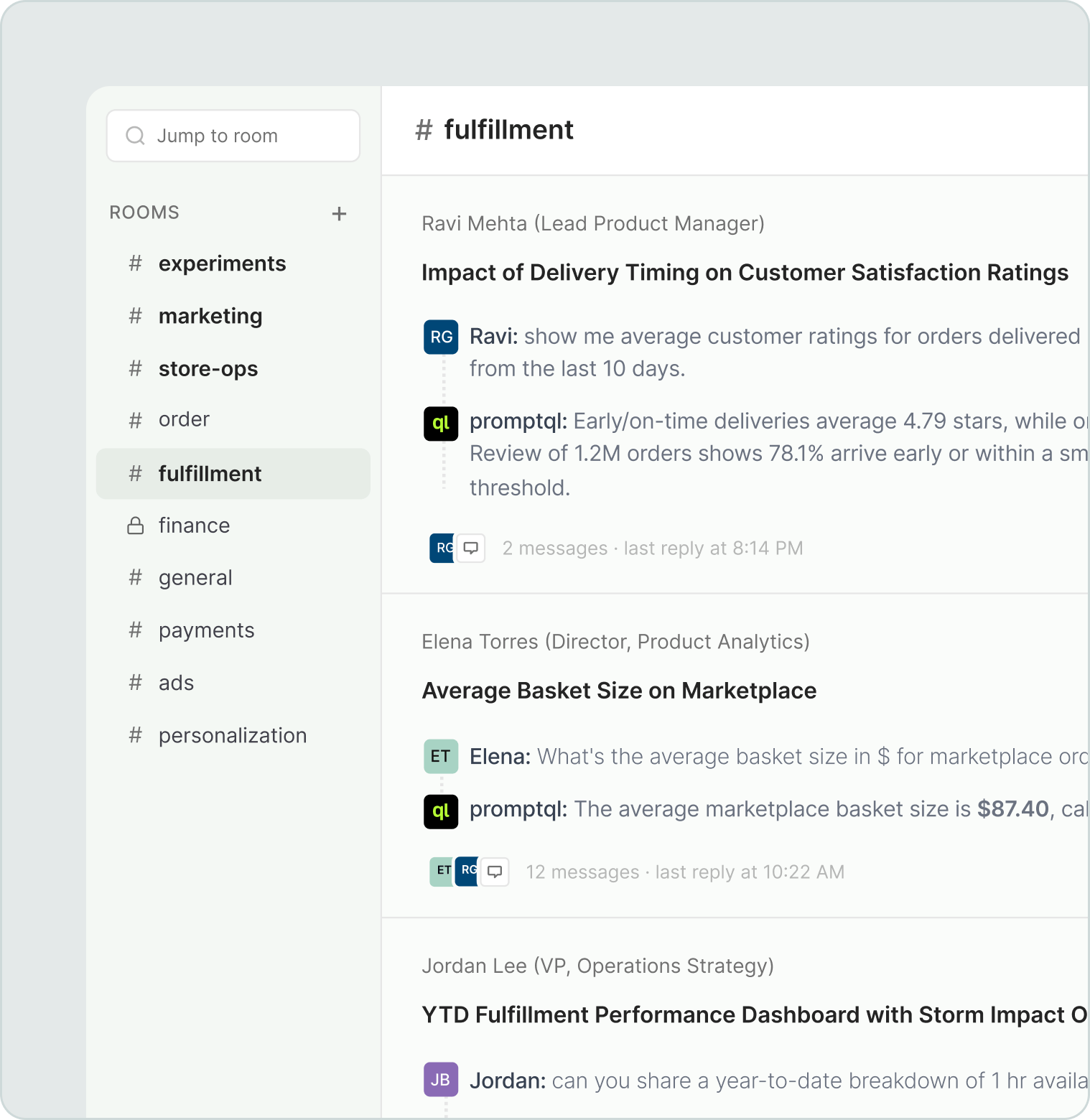

Multiplayer by design

Analysis has always been a team sport. Bring teammates into threads to review, clarify, correct, and refine. PromptQL works like a coworker in the loop, helping you explore hypotheses, pressure-test assumptions, and improve shared context.

Teachable in the flow

Rich context lives in everyday conversations. Shift a metric. Note an exception. Flag an edge case. Reset an assumption. PromptQL captures these decision traces and turns them into durable, shared understanding without adding documentation overhead.

Where ideas compound

Like Slack for your data conversations, PromptQL keeps analysis in open channels and shared threads.

Transparency drives momentum. Learn by exposure. Spark better questions. Build on prior thinking.

Move sensitive analysis into private threads or restricted channels when needed.

Secure from day 0

Designed to meet enterprise security and compliance standards from the get-go.

Permissions-aware

PromptQL respects your existing source permissions, including row- and column-level controls. Your access rules are always enforced.

Dedicated environment

PromptQL runs in a dedicated environment, connecting directly to your systems and executing generated programs in a sandboxed runtime.

Private by default

Work stays private unless you choose to share it, with explicit controls over what’s visible, to whom, and when.

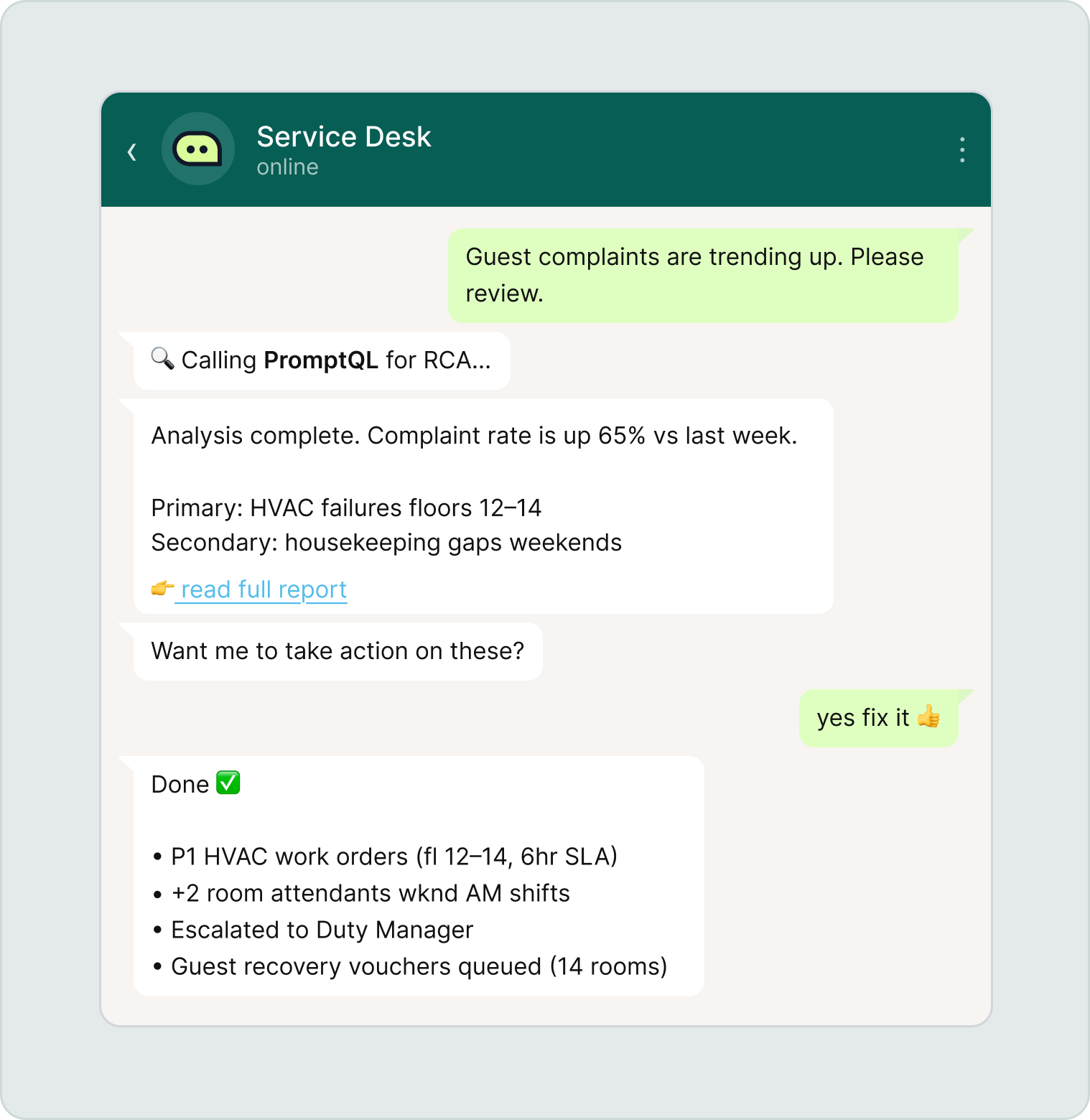

Trusted intelligence, embedded anywhere

INTERNAL TEAMS

Technical, business, and ops teams, along with executives, get instant, trusted answers to business questions without waiting on experts.

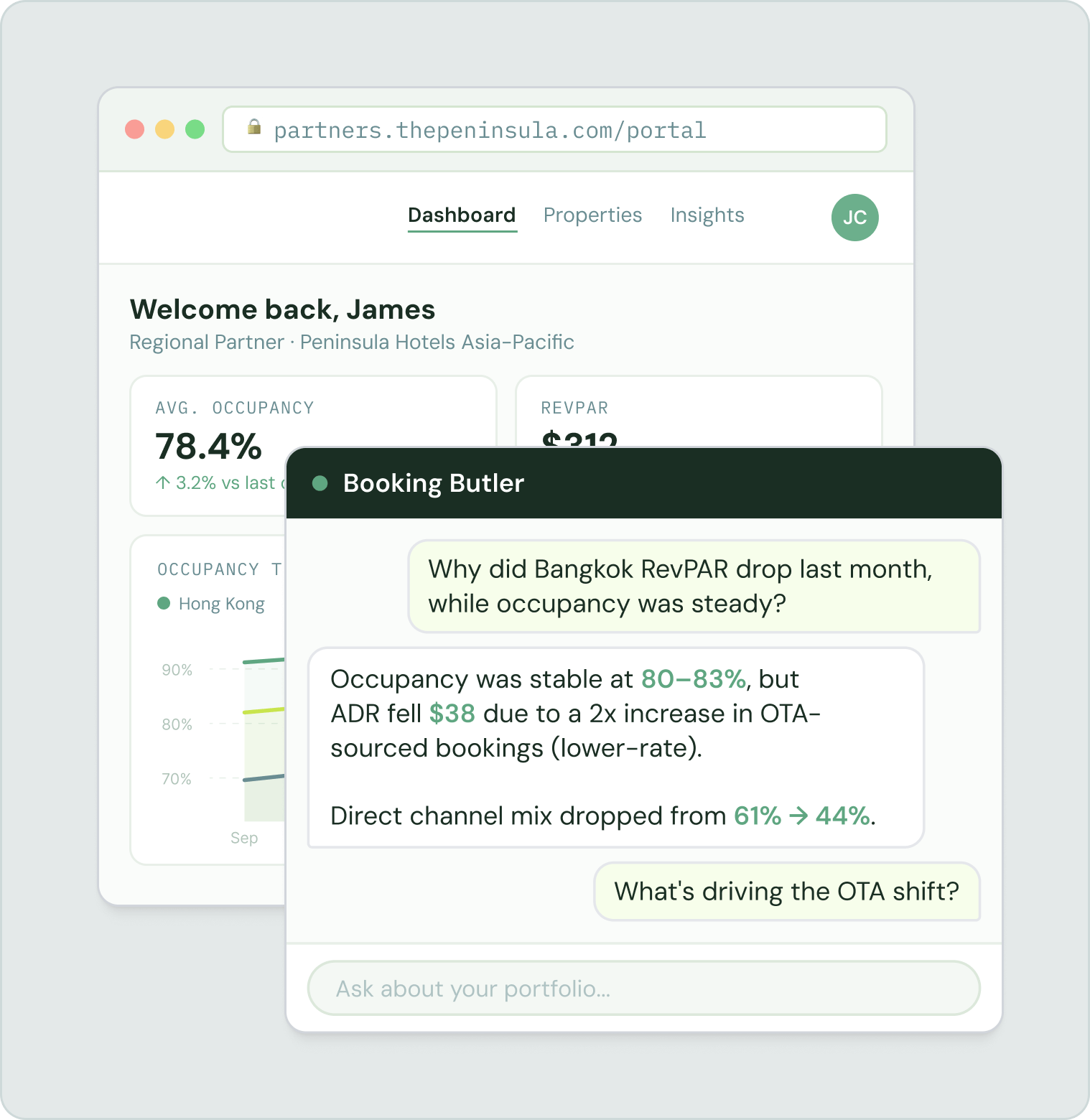

CUSTOMER-FACING PRODUCTS

Embed PromptQL under your brand so customers can explore and understand their own data, right inside your product.

AGENTS & AUTOMATION