19 Sep, 2025

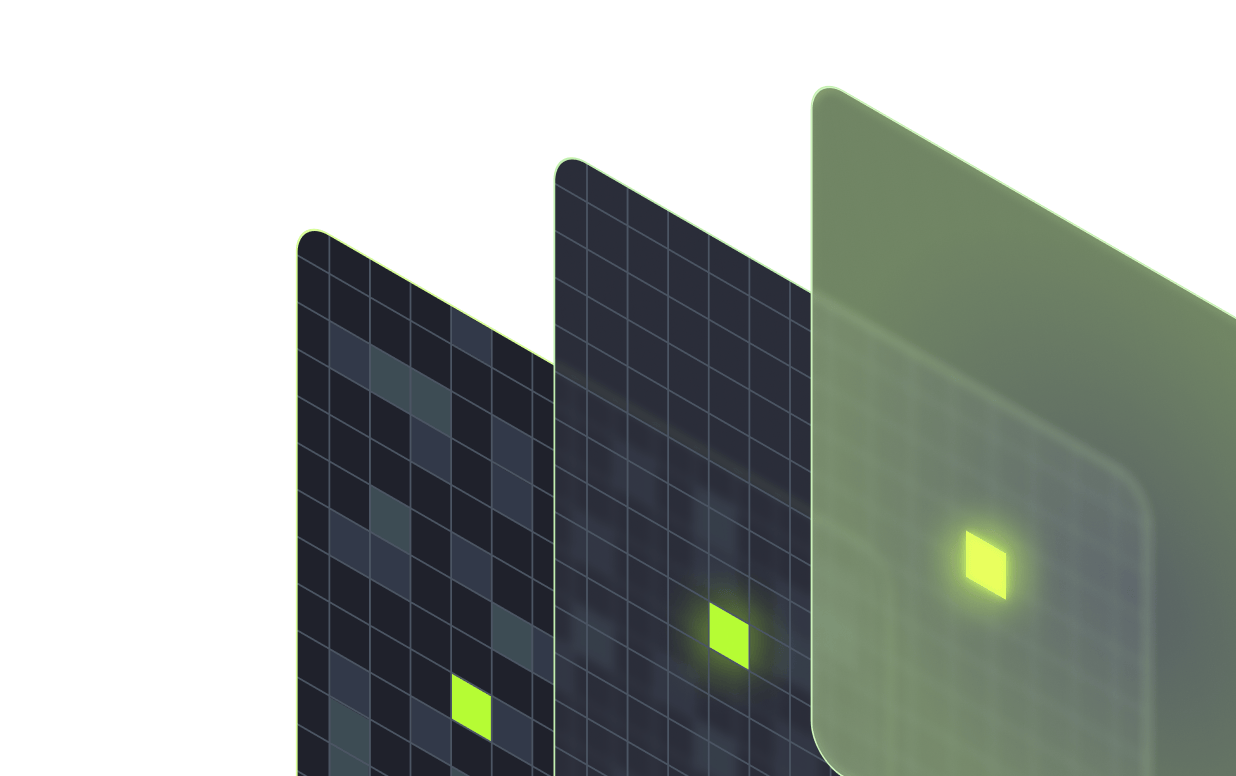

AI Analyst pyramid of needs

- Bottom: factual questions – What happened?

- Middle: understanding and foresight – Why? What’s next?

- Top: guidance and action – What should we do?

- Jump straight to actions without foundations → cool demos that die in pilot.

- Stay at the base → low impact, just another talk-to-dashboard wrapper.

T1: Descriptive – What happened?

- How many T1 orders did we ship in Zone 2 yesterday?

- What was the average abandoned cart size last quarter?

- Which organic products sold best during the holiday sale?

Capabilities for success:

- Semantic foundations

  Business concepts and terms (T1, Zone 2, VIP) are encoded for correct and consistent interpretation.

- Deterministic execution

  Natural language is mapped to well-reasoned query plans that are executed programmatically, outside the LLM context.

- Performance & freshness discipline

  Answers come quickly (sub-second to a few seconds) and reflect data within declared freshness windows.

- Explainability & receipts

  Every answer includes the value, time window, filters, and links to metric definitions and lineage, so executives get “one-line answers with receipts.”

- Governance & access control

  Enforces existing rules (RBAC, PII masking, etc.) and keeps immutable audit logs for accountability and compliance.

- Observability & guardrails

  Telemetry on routing, latency, cost, and data freshness, with circuit breakers to prevent runaway queries or degraded performance.

- Self-serve UX

  Simple interfaces where users can disambiguate terms and pivot results without wading through SQL.
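To make semantic foundations, deterministic execution, and receipts a bit more concrete, here is a minimal Python sketch. It assumes a hypothetical term catalog (`TERMS`), a hypothetical metric catalog (`METRICS`), and a toy `orders` table with `tier`, `zone`, and `ship_date` columns; none of these names come from a specific product. In this setup the model's only job would be to pick a metric, the business terms, and a time window. The SQL itself is compiled from vetted definitions and executed outside the LLM, and the result carries its own receipts (window, filters, definition link, and the exact query).

```python
import sqlite3
from dataclasses import dataclass

# Hypothetical semantic layer: business terms mapped to vetted SQL predicates.
TERMS = {
    "T1": "tier = 'T1'",
    "Zone 2": "zone = 2",
}

# Hypothetical metric catalog: metric name -> (SQL aggregate, definition link).
METRICS = {
    "orders_shipped": ("COUNT(*)", "metrics/orders_shipped"),
}


@dataclass
class Answer:
    """A value plus the 'receipts' needed to trust and reproduce it."""
    value: float
    window: tuple
    filters: list
    definition: str
    sql: str


def answer(conn, metric, terms, start, end):
    # Unknown metrics or terms raise KeyError instead of being guessed.
    agg, definition = METRICS[metric]
    predicates = [TERMS[t] for t in terms]
    # Predicates come only from the catalog; dates are bound as parameters.
    where = " AND ".join(predicates + ["ship_date BETWEEN ? AND ?"])
    sql = f"SELECT {agg} FROM orders WHERE {where}"
    (value,) = conn.execute(sql, (start, end)).fetchone()
    return Answer(value, (start, end), list(terms), definition, sql)


# Toy data for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (tier TEXT, zone INTEGER, ship_date TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("T1", 2, "2025-09-18"),
        ("T1", 2, "2025-09-18"),
        ("T2", 2, "2025-09-18"),
        ("T1", 1, "2025-09-18"),
        ("T1", 2, "2025-09-17"),
    ],
)
a = answer(conn, "orders_shipped", ["T1", "Zone 2"], "2025-09-18", "2025-09-18")
print(a)
```

Failing loudly on an undefined term is deliberate: that is the point where a real system would hand off to the disambiguation UX rather than let the model improvise a filter.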

Impact:

- Self-serve answers for the simple stuff

- Fewer ad-hoc analyst tickets

- Executives get reliable “one‑line answers with receipts.”

T2: Diagnostic – Why did it happen?

- Why did late returns on LEGO spike in February?

- Why did BOS outperform NYC for the Halloween25-2x campaign?

- Is the recent sales dip in NYC correlated with local weather events?

Capabilities for success:

- Multi-source fusion

  Combine structured internal data (sales, returns), external feeds (weather, shipping), and unstructured or semi-structured signals (support tickets, error logs).

- RCA runbooks

  Ability to accept RCA (root cause analysis) runbooks that capture how your analysts investigate shifts, either supplied ad hoc or baked into the AI for repeatability.

- Deeper reasoning & math engines

  An analytical core that can decompose changes into ranked drivers, control for confounders, and run sensitivity checks so explanations are stable, quantified, and defensible.

- Diagnostic UX

  Clear outputs that executives trust and analysts can rerun: driver narratives, quantified impacts, confidence levels, and reproducible plans (segments, filters, controls).
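One common way to decompose a change into ranked drivers, when the metric is a rate (say, late-return rate), is a mix/rate split. With segment volume shares `w_i` and segment rates `r_i`, the overall rate is `R = sum(w_i * r_i)`. Using midpoints, each segment's mix effect is `(w1_i - w0_i) * (r0_i + r1_i) / 2` and its rate effect is `(r1_i - r0_i) * (w0_i + w1_i) / 2`, and summed over segments they reproduce `R1 - R0` exactly. The sketch below is one illustrative convention among several, with toy channel counts; it does not attempt confounding controls or sensitivity checks.

```python
from dataclasses import dataclass


@dataclass
class Driver:
    segment: str
    mix_effect: float   # change from the segment's share of volume shifting
    rate_effect: float  # change from the segment's own rate moving

    @property
    def total(self) -> float:
        return self.mix_effect + self.rate_effect


def decompose_rate_change(before, after):
    """before/after map segment -> (events, volume), e.g. (late_returns, orders).

    Returns drivers ranked by absolute contribution to the change in the overall rate.
    """
    vol0 = sum(v for _, v in before.values())
    vol1 = sum(v for _, v in after.values())
    drivers = []
    for seg in before.keys() | after.keys():
        e0, v0 = before.get(seg, (0, 0))
        e1, v1 = after.get(seg, (0, 0))
        w0, w1 = v0 / vol0, v1 / vol1      # volume shares
        r0 = e0 / v0 if v0 else 0.0        # segment rates (0 if segment absent)
        r1 = e1 / v1 if v1 else 0.0
        mix = (w1 - w0) * (r0 + r1) / 2    # symmetric (midpoint) split;
        rate = (r1 - r0) * (w0 + w1) / 2   # algebraically mix + rate == w1*r1 - w0*r0
        drivers.append(Driver(seg, mix, rate))
    return sorted(drivers, key=lambda d: abs(d.total), reverse=True)


# Toy numbers: late returns and orders by channel, January vs. February.
before = {"online": (20, 1000), "store": (10, 1000)}
after = {"online": (60, 1500), "store": (10, 500)}

drivers = decompose_rate_change(before, after)
for d in drivers:
    print(f"{d.segment:<8} mix={d.mix_effect:+.4f} rate={d.rate_effect:+.4f} total={d.total:+.4f}")
```

With these toy numbers the overall rate rises from 1.5% to 3.5%. The online channel accounts for the full 2-point increase, while the store channel's higher rate is offset by its shrinking share, which is exactly the kind of split a driver narrative should surface.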

Impact:

- RCAs that used to take hours shrink to minutes → faster actions

- Executives get driver narratives with evidence, not just charts.

- Analysts focus on higher-value validation and design of next steps.

T3: Prescriptive – What should we do?

- In the next 30 days, what’s the optimal sequence of email vs. push campaigns to reactivate customers inactive since summer?

- What products should get premium shelf space in Austin stores right now given the wet weather?

- During December peak, which shipping options should we feature at checkout to maximize conversion while keeping fulfillment costs inside budget?

Capabilities for success:

- Decision frames & guardrails

  Ability to formalize objectives, levers, and constraints (budget, capacity, compliance) so recommendations always land inside safe boundaries.

- Reasoning & optimization engines

  Core models that estimate uplift, simulate scenarios, and resolve trade-offs across multiple objectives (e.g., conversion vs. cost), including sequencing and pacing logic.

- Uncertainty & robustness

  Every recommendation comes with confidence intervals, sensitivity checks, and caveats, so leaders see not just what to do but how reliable it is.

- Closed-loop learning

  Experimentation and off-policy evaluation to validate recommendations, with feedback loops that update the models.

- Prescriptive UX & governance

  Options presented as ranked, quantified choices with rationale, risks, and required approvals. Every recommendation is reproducible, auditable, and linked to an owner.
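A decision frame can be as small as an explicit objective, a set of levers, and hard constraints. The sketch below is loosely modeled on the December checkout question. It assumes hypothetical shipping options, each with a point estimate and a pessimistic estimate of conversion uplift (in basis points) plus an expected fulfillment cost, and it treats uplifts as additive, which is a strong simplification. It enumerates every feasible subset, which only works for a handful of levers; a production optimizer would more likely hand the same frame to an integer-programming solver.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Option:
    """A lever: one shipping option that could be featured at checkout (hypothetical)."""
    name: str
    uplift_bps: int      # point estimate of conversion uplift, in basis points
    uplift_low_bps: int  # pessimistic end of the uplift's uncertainty interval
    cost: int            # expected incremental fulfillment cost for the period


@dataclass(frozen=True)
class DecisionFrame:
    """Objective settings and hard constraints for the recommendation."""
    budget: int           # max total incremental fulfillment cost
    max_slots: int        # how many options checkout can feature
    robust: bool = False  # optimize the pessimistic uplift instead of the point estimate


def recommend(options, frame, top_k=3):
    """Enumerate every feasible subset and rank by total (assumed additive) uplift."""
    scored = []
    for k in range(1, frame.max_slots + 1):
        for combo in combinations(options, k):
            cost = sum(o.cost for o in combo)
            if cost > frame.budget:
                continue  # guardrail: infeasible plans are never recommended
            value = sum(o.uplift_low_bps if frame.robust else o.uplift_bps for o in combo)
            scored.append((value, cost, [o.name for o in combo]))
    # Highest value first; the cheaper plan wins ties.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return scored[:top_k]


# Toy estimates for illustration only.
options = [
    Option("free_standard", 120, 40, 50_000),
    Option("express_discount", 80, 60, 30_000),
    Option("store_pickup", 50, 40, 5_000),
    Option("same_day", 150, 20, 80_000),
]

best = recommend(options, DecisionFrame(budget=90_000, max_slots=2))
safest = recommend(options, DecisionFrame(budget=90_000, max_slots=2, robust=True))
print(best[0], safest[0])
```

With a 90,000 budget and two slots, the point estimates favor free standard plus discounted express, while `robust=True` ranks discounted express plus store pickup first. That kind of flip is what the uncertainty step is meant to put in front of a decision-maker, together with the ranked alternatives.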

Impact:

- Leaders get actionable options.

- Operators save time with ranked choices.

- Decisions are consistent and transparent.

- Everyone benefits from high-quality reasoning that improves over time.

A note on autonomy

- Autonomy usually sits outside the analyst/data scientist purview.

  It lives more in the business domain, where leaders decide which kinds of actions are safe and desirable to automate.

- Not all actions can be triggered in software.

  In many cases, the intervention required is human or analog (a store redesign, a contract renegotiation, a customer call), and it can’t be pushed into a system as a simple trigger.