The RAG Bottleneck: When One Size Fits Nothing

Traditional Retrieval-Augmented Generation (RAG) systems suffer from a fundamental inefficiency that's hiding in plain sight: They treat every query identically, forcing simple questions through the same expensive embedding and vector search pipeline as complex or technical requests.

Throughout this exploration of intent-driven architectures, we'll use a documentation assistant as our primary example. But don't mistake this for a post about documentation systems (though I do enjoy writing about docs, and you can find some older posts here and here 😉). The architectural principles we're discussing apply to any knowledge-intensive AI application: customer support bots, internal knowledge bases, research assistants, or any system where users ask fundamentally different types of questions that require fundamentally different approaches to answer.

Documentation assistants simply make an ideal case study because they expose the full spectrum of query types in sharp relief. When a developer asks "What is a database connector?" versus "Show me the metadata for configuring JWT authentication," the difference in required processing, validation, and response speed becomes impossible to ignore. These systems handle everything from conceptual explanations to precise technical specifications; this makes them the perfect laboratory for understanding how intent-driven routing can transform performance and accuracy.

This "one-size-fits-all" approach, in which every query is forced through an embedding pipeline, creates three critical problems that hurt performance, reliability, and accuracy. We'll explore each of them below.

The Three Critical Problems with Traditional RAG

Problem #1: The "Expensive Operation Tax"

The Issue: Every query pays the full embedding + similarity search cost, regardless of complexity or whether retrieval is actually needed.

Real Impact: Simple questions like "What is a connector?" trigger the same expensive pipeline as complex troubleshooting scenarios. A user asking for a basic definition waits an inordinate amount of time while the system performs vector operations on information the LLM already knows from its training data.

The Waste: Resources are consumed on unnecessary embedding generation, similarity calculations, and content aggregation when a direct model response would be faster and equally accurate.

Performance Cost: This creates a flat performance profile where every interaction feels slow, regardless of query complexity.
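
The sketch below makes the tax concrete with a minimal embedding-first pipeline. It assumes a toy bag-of-words `embed` function in place of a real embedding model and an in-memory list in place of a vector store. Both are hypothetical stand-ins. What matters is the shape of the path: every query, simple or complex, pays for one embedding plus a similarity comparison against every stored chunk.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class EmbeddingFirstRAG:
    """Every query takes the same path: embed it, score every chunk, keep the top k."""

    def __init__(self, chunks: list[str]) -> None:
        self.index = [(chunk, embed(chunk)) for chunk in chunks]
        self.embedding_calls = 0  # query-time embeddings
        self.vector_ops = 0       # similarity computations

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        self.embedding_calls += 1
        scored = []
        for chunk, vec in self.index:
            scored.append((cosine(q, vec), chunk))
            self.vector_ops += 1
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [chunk for _, chunk in scored[:k]]


rag = EmbeddingFirstRAG([
    "a connector links a data source",
    "run the init command to create a connector",
    "jwt auth requires a shared secret",
])
rag.retrieve("what is a connector")               # a definition the model likely knows
rag.retrieve("my jwt auth is failing with a 401")  # a genuine troubleshooting query
print(rag.embedding_calls, rag.vector_ops)  # 2 6
```

The definition question costs exactly as much as the troubleshooting question. With a real model and a real index, that cost is what sets the flat performance profile.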

Problem #2: The "One Size Fits All" Trap

The Issue: RAG systems don't differentiate between fundamentally different types of user intent, applying the same retrieval-then-generate approach to all queries.

Examples of Mismatched Treatment from our Experience:

- "What is PromptQL?" (needs conceptual explanation from model knowledge)

- "How do I initialize a connector?" (needs exact CLI commands with validation)

- "Show me a model config" (needs validated YAML examples with correct syntax)

The Result: Users get suboptimal responses because the system doesn't understand what type of answer they actually need. Conceptual questions get buried in technical details, while technical questions lack the precision required for implementation.

Problem #3: The "Hallucination Paradox"

The Issue: RAG retrieves semantically similar content but doesn't validate the accuracy of generated responses, creating a false sense of reliability and accuracy.

Specific Problems with Hallucination & Docs:

- CLI commands that don't actually exist - similarity search finds related content, but the generated command syntax is wrong or includes imaginary flags

- Configuration syntax that's close but incorrect - YAML examples that mix different versions, contexts, and imagined syntax

- Examples that combine incompatible elements - configurations that look right but won't work in practice

User Impact: Developers follow plausible-looking but incorrect instructions, leading to broken workflows, debugging sessions, and lost productivity. The retrieved context gives confidence, but the generated response contains subtle errors that cause real problems.

This paradox is particularly dangerous in technical documentation where precision matters - a single incorrect flag or syntax error can block a user's entire workflow.

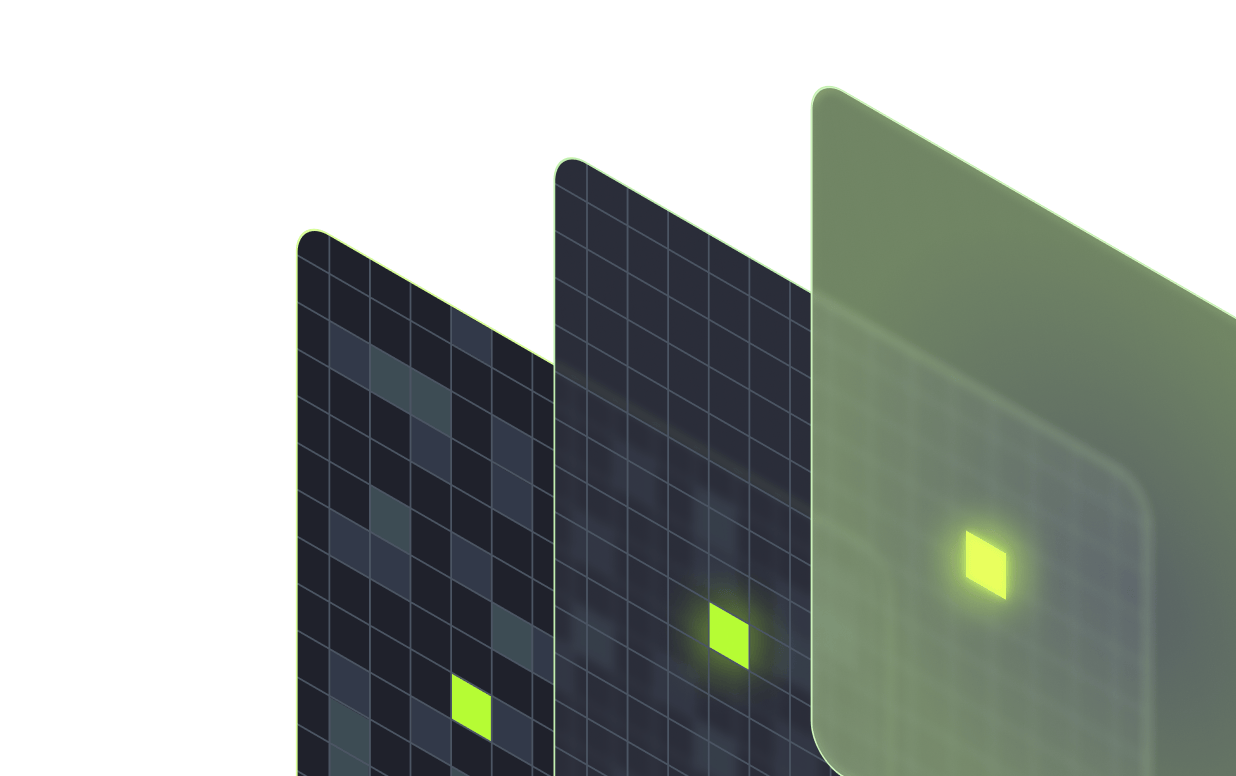

The Intent-Driven Solution: Database Optimization for Unstructured Data

The breakthrough came from applying database query optimization principles to unstructured data retrieval. SQL query planners choose between index scans and full table scans based on estimated cost. In the same way, intent-driven systems use intelligent classification to route each query through the response pathway best suited to it.

The Four-Category Framework

General Information: When users ask "What is a connector?" or "How does authentication work?", they need conceptual explanations that a modern LLM can usually provide from its training data. These queries should be recognized and answered directly, in under a second, completely bypassing expensive vector operations.

Guide Requests: Questions like "How do I authenticate?" or "Walk me through the setup process" signal that users need step-by-step instructions with specific commands. Here, accuracy is critical: a wrong API endpoint or request shape can break everything. These queries should trigger targeted validation protocols that verify each instruction exists in the documentation before responding.
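
Here is a minimal sketch of that guide-request check. It assumes the API reference can be reduced to a set of documented `(method, path)` pairs and that drafted steps mention endpoints as `METHOD /path`. The endpoints and the `DOCUMENTED_ENDPOINTS` set are hypothetical. Each endpoint in a drafted step is looked up exactly, so a step that only sounds right gets flagged before the answer goes out.

```python
import re

# Hypothetical: exact endpoints extracted from the API reference.
DOCUMENTED_ENDPOINTS = {
    ("POST", "/auth/token"),
    ("GET", "/auth/me"),
}

ENDPOINT_RE = re.compile(r"\b(GET|POST|PUT|PATCH|DELETE)\s+(/\S*)")


def unverified_steps(steps: list[str]) -> list[str]:
    """Return drafted steps that mention an endpoint absent from the docs."""
    flagged = []
    for step in steps:
        for method, path in ENDPOINT_RE.findall(step):
            # Trailing sentence punctuation is not part of the path.
            if (method, path.rstrip(".,")) not in DOCUMENTED_ENDPOINTS:
                flagged.append(step)
                break
    return flagged


draft = [
    "1. Request a token with POST /auth/token.",
    "2. Refresh it with POST /auth/refresh.",
    "3. Check the session with GET /auth/me.",
]
print(unverified_steps(draft))  # ['2. Refresh it with POST /auth/refresh.']
```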

Example Requests: When users say "Show me a complete request" or "What does the configuration look like?", they're requesting concrete examples with correct syntax. Traditional RAG often hallucinates plausible-looking but incorrect JSON or YAML configurations. The intent-driven approach validates examples against actual documentation, ensuring users get working configurations.
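
A minimal sketch of example validation follows, using JSON so that it needs only the standard library. It assumes the documentation has been distilled into a schema of allowed keys, their types, and the required keys. `DOCUMENTED_SCHEMA`, `REQUIRED_KEYS`, and the key names are hypothetical. A generated example is shown to the user only if this returns no problems.

```python
import json

# Hypothetical schema distilled from the docs: allowed keys and their types.
DOCUMENTED_SCHEMA = {
    "name": str,
    "url": str,
    "timeout_seconds": int,
}
REQUIRED_KEYS = {"name", "url"}


def validate_example(raw: str) -> list[str]:
    """Return problems found in a generated JSON config; an empty list means it passed."""
    try:
        config = json.loads(raw)
    except json.JSONDecodeError as err:
        return [f"invalid JSON: {err.msg}"]
    if not isinstance(config, dict):
        return ["top-level value must be an object"]
    problems = [f"missing required key: {key}" for key in sorted(REQUIRED_KEYS - set(config))]
    for key, value in config.items():
        expected = DOCUMENTED_SCHEMA.get(key)
        if expected is None:
            problems.append(f"undocumented key: {key}")
        elif not isinstance(value, expected):
            problems.append(f"wrong type for {key}: expected {expected.__name__}")
    return problems


generated = '{"name": "crm", "url": "https://example.com", "timeout": 30}'
print(validate_example(generated))  # ['undocumented key: timeout']
```

The same idea carries over to YAML with a YAML parser and a fuller schema. The essential choice is an exact comparison against the documented shape, not a similarity score.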

Troubleshooting: Complex queries like "My authentication is failing" or detailed error scenarios genuinely need a comprehensive documentation search. For these cases, the full RAG pipeline is kept but applied selectively, so complex problems get thorough treatment while simple questions aren't slowed down.
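
The four categories map naturally onto a dispatch table. This sketch assumes the intent has already been decided upstream, for instance by the pattern matching described in Step 2 below. The handler functions are hypothetical placeholders that only report which pathway ran. Only the troubleshooting route pays for full retrieval.

```python
from enum import Enum
from typing import Callable


class Intent(Enum):
    GENERAL = "general_information"
    GUIDE = "guide_request"
    EXAMPLE = "example_request"
    TROUBLESHOOTING = "troubleshooting"


# Hypothetical handlers; real ones would call an LLM, validators, or a vector store.
def answer_directly(q: str) -> str:
    return f"model-only answer to: {q}"


def answer_with_validated_steps(q: str) -> str:
    return f"steps checked against docs for: {q}"


def answer_with_validated_example(q: str) -> str:
    return f"example checked against docs for: {q}"


def answer_with_full_rag(q: str) -> str:
    return f"full retrieval + generation for: {q}"


ROUTES: dict[Intent, Callable[[str], str]] = {
    Intent.GENERAL: answer_directly,
    Intent.GUIDE: answer_with_validated_steps,
    Intent.EXAMPLE: answer_with_validated_example,
    Intent.TROUBLESHOOTING: answer_with_full_rag,
}


def route(query: str, intent: Intent) -> str:
    """Dispatch to the pathway for this intent; only troubleshooting uses full RAG."""
    return ROUTES[intent](query)


print(route("What is a connector?", Intent.GENERAL))
# model-only answer to: What is a connector?
```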

The Performance Impact

This architectural approach delivers dramatic improvements:

- 26x to 90x faster responses for simple conceptual questions (0.5 seconds vs. 13-45 seconds)

- 1.9x to 4.5x improvement for complex queries requiring validation (10-20 seconds vs. 13-45 seconds)

- 75% reduction in unnecessary vector operations across all query types

- Near-zero hallucination for technical commands and examples through targeted validation

- ~70% overall response time improvement while maintaining higher accuracy
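
The speedup range in the first bullet follows directly from the stated latencies. Divide the embedding-first latency range by the direct-answer latency:

```latex
\frac{13\ \text{s}}{0.5\ \text{s}} = 26\times, \qquad \frac{45\ \text{s}}{0.5\ \text{s}} = 90\times
```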

NB: These numbers are based on comparing an embedding-first pipeline with the four-category framework described above. In our follow-up post, we’ll share more details on how you can build this, too. For now, check out the abstract steps below 👇

Moving Beyond Basic RAG: Implementation Strategy

Step 1: Audit Your Query Patterns

Analyze how users actually interact with your system. Categorize incoming questions by complexity and intent; you'll likely find that 50-70% of queries are simple conceptual questions that don't need retrieval at all. Then compare where your expensive operations are spent against the value they actually provide.
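
One lightweight way to run this audit is to hand-label a sample of logged queries and measure the share of each category. The sample and labels below are hypothetical and are there only to show the mechanics. Your own logs will give your own distribution.

```python
from collections import Counter

# Hypothetical sample: logged queries, labeled by hand during the audit.
labeled_sample = [
    ("What is a connector?", "general"),
    ("What is PromptQL?", "general"),
    ("How does authentication work?", "general"),
    ("How do I initialize a connector?", "guide"),
    ("Show me a model config", "example"),
    ("My authentication is failing with a 401", "troubleshooting"),
]


def category_shares(sample: list[tuple[str, str]]) -> dict[str, float]:
    """Fraction of queries per intent label, rounded for readability."""
    counts = Counter(label for _, label in sample)
    total = len(sample)
    return {label: round(n / total, 2) for label, n in counts.most_common()}


shares = category_shares(labeled_sample)
print(shares)
# {'general': 0.5, 'guide': 0.17, 'example': 0.17, 'troubleshooting': 0.17}
```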

Step 2: Implement Intent Classification

Build a fast classification system that routes queries before they hit expensive operations. Start with pattern matching for common question types ("what is", "how do I", "show me an example"), then refine based on your domain. Make this classification lightweight and scalable.
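
A minimal regex-only classifier along these lines is sketched below. The patterns are illustrative, taken from the question types used throughout this post, and would need tuning against real logs. Rules are ordered, so an error report phrased as "How do I fix this error?" is caught as troubleshooting first. Unmatched queries fall back to the full pipeline. That conservative default is an assumption of this sketch, not a requirement of the approach.

```python
import re

# Ordered rules: the first match wins. Patterns are illustrative starting points.
RULES = [
    ("troubleshooting", re.compile(r"\b(error|fail(s|ed|ing)?|broken|not working)\b", re.I)),
    ("example", re.compile(r"\b(show me|examples?)\b|\blook like\b", re.I)),
    ("guide", re.compile(r"^(how do i|walk me through)\b|\bsteps?\b", re.I)),
    ("general", re.compile(r"^(what is|what are|how does|explain)\b", re.I)),
]


def classify(query: str) -> str:
    """Cheap intent classification that runs before any embedding call."""
    text = query.strip()
    for intent, pattern in RULES:
        if pattern.search(text):
            return intent
    # Unrecognized queries take the full pipeline rather than risk a bad shortcut.
    return "troubleshooting"


for q in ["What is a connector?", "How do I authenticate?",
          "What does the configuration look like?", "My authentication is failing"]:
    print(q, "->", classify(q))
```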

Step 3: Build Validation Protocols

For queries needing technical accuracy, create exact validation mechanisms rather than relying on similarity search alone. Design verification protocols specific to your domain, whether API endpoints, database schemas, or command syntax. Query authoritative sources directly to confirm details exist before generating responses.
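
For command syntax, exact validation can be as simple as checking a generated command against a command reference extracted from the docs. In this sketch, the CLI name `mycli`, its subcommands, and its flags are all hypothetical. This is precisely the check that catches the imaginary flags described in Problem #3.

```python
import shlex

# Hypothetical command reference extracted from CLI docs:
# subcommand path -> long flags that path accepts.
DOCUMENTED_COMMANDS = {
    ("connector", "init"): {"--template", "--port"},
    ("connector", "list"): set(),
}


def check_command(command: str, cli: str = "mycli") -> list[str]:
    """Exact lookup of a generated command against the reference; [] means it passed."""
    tokens = shlex.split(command)
    if not tokens or tokens[0] != cli:
        return [f"expected a '{cli}' command"]
    args = tokens[1:]
    # Flag values (e.g. "postgres") also land here; prefix matching below tolerates that.
    positionals = [t for t in args if not t.startswith("-")]
    # Only long flags are checked; "--flag=value" is reduced to "--flag".
    flags = {t.split("=", 1)[0] for t in args if t.startswith("--")}
    # The longest documented subcommand path that prefixes the positional tokens wins.
    matches = [p for p in DOCUMENTED_COMMANDS if tuple(positionals[: len(p)]) == p]
    if not matches:
        return [f"undocumented subcommand: {' '.join(positionals[:2])}"]
    path = max(matches, key=len)
    unknown = sorted(flags - DOCUMENTED_COMMANDS[path])
    return [f"undocumented flag for '{' '.join(path)}': {flag}" for flag in unknown]


print(check_command("mycli connector init my_pg --template postgres --auto-migrate"))
# ["undocumented flag for 'connector init': --auto-migrate"]
```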

Step 4: Optimize Response Patterns

Create response protocols tailored to each intent category. Structure validation logic so it adds as little latency as possible. Monitor each query category separately to identify optimization opportunities and to confirm that your routing logic matches actual user patterns.
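
Per-category monitoring can start as a small in-process collector, sketched below under the assumption that each response is tagged with its routed intent and with whether retrieval ran. The class name and fields are hypothetical. A high retrieval rate in a category that should skip retrieval is a sign the routing rules need tuning.

```python
import statistics
from collections import defaultdict


class CategoryMonitor:
    """Collects per-intent latencies and retrieval usage for review by category."""

    def __init__(self) -> None:
        self.latencies: dict[str, list[float]] = defaultdict(list)
        self.retrieval_calls: dict[str, int] = defaultdict(int)

    def record(self, intent: str, seconds: float, used_retrieval: bool) -> None:
        self.latencies[intent].append(seconds)
        if used_retrieval:
            self.retrieval_calls[intent] += 1

    def report(self) -> dict[str, dict[str, float]]:
        return {
            intent: {
                "count": len(values),
                "median_s": statistics.median(values),
                "retrieval_rate": self.retrieval_calls[intent] / len(values),
            }
            for intent, values in self.latencies.items()
        }


# Illustrative, made-up measurements.
monitor = CategoryMonitor()
monitor.record("general", 0.25, used_retrieval=False)
monitor.record("general", 0.75, used_retrieval=True)  # a likely misroute
monitor.record("troubleshooting", 12.0, used_retrieval=True)
print(monitor.report()["general"])
# {'count': 2, 'median_s': 0.5, 'retrieval_rate': 0.5}
```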

The Post-RAG Era

Traditional RAG is like running SELECT * on every database query. The intent-driven approach shows how to apply proper query optimization: using targeted queries that fetch only what's needed, avoiding expensive operations when simpler ones suffice, and validating results where accuracy matters.

This represents more than just an optimization technique. It's a fundamental shift from treating all queries as retrieval problems to understanding them as intent problems. Database engines evolved from executing simple queries to sophisticated query planning that chooses optimal execution paths. LLM applications working with mixes of structured and unstructured data need similar intelligent routing to scale effectively.