The semantic layer is dead. Long live the wiki.

Most “AI on data” programs are just semantic-layer maximalism with a new paint job: if we can finally standardize meaning, the model will finally reason accurately.

It won’t. A perfect semantic layer is neither sufficient nor operable. The bottleneck is organizational semantics at runtime, not SQL.

- Semantic layer is information-poor

- Semantic layer operating model is flawed

- The precedent: Wikipedia

- Wiki implicitly contains a semantic layer

- Organization brain with octopus arms

The semantic layer is information-poor for AI

Semantic layers are designed as a governed interface for humans to retrieve numbers, not to inhabit meaning. They capture the clean, declarative surface area of truth and omit the messy parts that determine whether an answer is correct.

The majority of what makes metrics usable is missing:

Meaning is contextual, not global. The same metric name can legitimately refer to different implementations across product lines, geos, channels, or lifecycle stages. A semantic layer encourages flattening this into one “definition” under the guise of governance.

Definitions are political and time-bound. Ownership sits with the business, priorities shift quarterly, and what “counts” changes accordingly. Static interfaces pretend meaning is stable when it’s negotiated.

Operational intelligence is non-declarative. “How do I interpret this?” is rarely answered by a formula. You need causal folk models, known anomaly patterns, failure modes, and the “if X then check Y” heuristics that live in experienced operators.

Temporal continuity matters. Most metrics have version histories, instrumentation changes, backfills, silent breaks, and embarrassing periods everyone learned to discount. That context is mostly narrative, not schema.

Relationships are about trade-offs, not joins. Real decision-making depends on tensions (optimize A, damage B), guardrails, and strategic intent. Semantic layers describe entities; they don’t encode the organization’s trade-off graph.

Stakeholder meaning diverges by role. “Revenue” for the CFO and “revenue” for the Sales VP are not the same question even when the number is the same. A single canonical definition is a UI convenience masquerading as epistemology.

“Now what?” is the point. An AI that returns a metric without the response protocol is doing the easy half. Runbooks and escalation paths are part of meaning.

A semantic layer can be perfect and still produce garbage decisions because it’s optimized for consistency over situated correctness.

The semantic layer operating model is structurally wrong

Even if you wanted to cram the missing knowledge into a semantic layer, the maintenance model collapses under its own sociology.

Centralized semantic layers are planned cities: beautiful in the blueprint, empty in practice. Semantics evolve at the edges, among the people who ship, sell, operate, and get paged. The center cannot keep up, and it cannot compel truth.

The failure modes are predictable:

The experts who can write it won’t. Deep metric knowledge is held by high-leverage operators; their marginal hour is never best spent authoring centralized documentation that mostly helps strangers.

Central teams are paced by infrastructure, not decisions. The business iterates weekly; the data platform iterates quarterly. Any interface maintained on platform cadence becomes stale, then distrusted, then ignored.

Tight coupling blocks shipping. If semantic context lives “in the data layer,” every AI feature becomes dependent on an always-incomplete canonicalization project. Data is never “done,” so AI velocity becomes permanently hostage.

Incentives are inverted. Producers don’t benefit from documenting; consumers can’t document correctly; owners of context are neither staffed nor accountable for semantic hygiene in a centralized model.

Cold-start kills adoption. Semantic layers only feel useful after critical mass; reaching critical mass requires upfront drudgery with delayed payoff. Organizations don’t fail because they’re lazy; they fail because the payoff horizon is mispriced.

So you get shadow metrics, spreadsheet truth, ad hoc definitions, and a semantic layer that exists primarily as a compliance artifact.

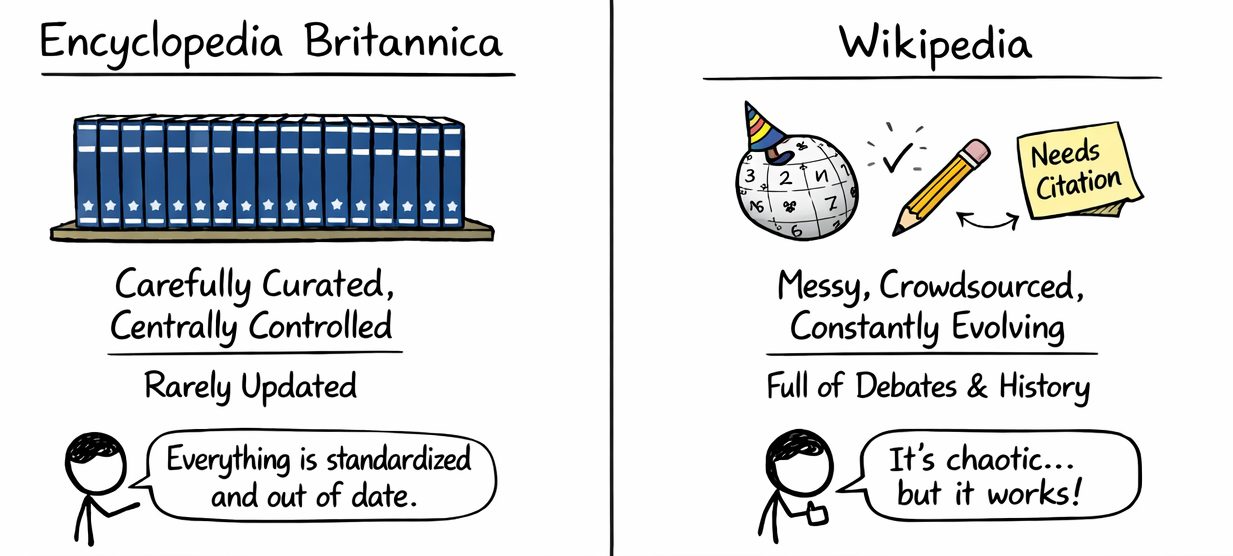

Wikipedia already solved the scaling problem

We already know which kind of system reliably captures messy, contested knowledge at global scale without a centrally curated ontology: a wiki.

Wikipedia works because it’s a sociotechnical pattern that fits reality:

- contribution is granular, continuous, and opportunistic

- disagreement is expected and recorded

- history is first-class

- coverage emerges from use, not mandates

- accuracy improves via collaborative iteration, not certification

Your organization’s meaning is closer to Wikipedia than to Encyclopedia Britannica. Treat it that way.

The alternative: a wiki that implicitly contains the semantic layer

Stop trying to make the semantic layer the source of meaning. Make it a compiled artifact derived from a living knowledge substrate.

Build an internal wiki where operators and business analysts can capture what they already know. Then put the wiki in the loop with AI. The point is not documentation for its own sake; it is feedback coupling: the AI answers from the wiki, and every gap or correction it surfaces comes back as an edit.

This creates a virtuous cycle: usage drives coverage, coverage improves the AI, and a better AI increases usage. The knowledge base converges because it is continuously exercised under real demand.
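One way to picture this loop is a wiki that treats every unanswered AI question as recorded demand. The sketch below is a minimal illustration, not a real library: the `Wiki` class, its `ask`, `contribute`, and `backlog` methods, and the exact-match lookup on a topic key are all assumptions made for the example.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Wiki:
    """Hypothetical in-memory wiki: topic -> free-text page."""
    pages: Dict[str, str] = field(default_factory=dict)
    gaps: Counter = field(default_factory=Counter)  # missing topics, counted by demand

    def ask(self, topic: str) -> Optional[str]:
        """Return the page for a topic, or record the miss as demand."""
        page = self.pages.get(topic)
        if page is None:
            self.gaps[topic] += 1
        return page

    def contribute(self, topic: str, text: str) -> None:
        """An operator fills a gap; the topic no longer counts as missing."""
        self.pages[topic] = text
        self.gaps.pop(topic, None)

    def backlog(self, n: int = 3) -> List[Tuple[str, int]]:
        """Most-requested missing topics, i.e. where the next edit pays off most."""
        return self.gaps.most_common(n)


wiki = Wiki()
wiki.ask("churn")
wiki.ask("churn")
wiki.ask("net revenue")
print(wiki.backlog())  # [('churn', 2), ('net revenue', 1)]

wiki.contribute("churn", "Excludes trial accounts; see the billing team's notes.")
print(wiki.backlog())  # [('net revenue', 1)]
```

The design choice worth noting is that coverage is prioritized by observed misses rather than by a documentation plan, which is the "usage drives coverage" half of the cycle.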

We can still have governed models, dimensional abstractions, and metric layers. But they should sit downstream, generated from or validated against the wiki, rather than treated as the place where meaning lives.

This inversion matters because it reframes the work from “build the perfect semantic layer” to “capture organizational meaning where it actually exists, then compile interfaces from it.”
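To make "compile interfaces from it" concrete, here is a rough sketch of deriving a metric layer from wiki entries. Everything in it is hypothetical: the `WikiEntry` fields, the `compile_metric_layer` function, and the sample expressions are invented for illustration, and a real wiki would hold far more narrative than structure.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class WikiEntry:
    """Hypothetical wiki revision about one metric in one context."""
    metric: str                 # e.g. "active_users"
    context: str                # e.g. product line, geo, or role
    effective: date             # when this interpretation took effect
    expression: Optional[str]   # a formula, if one exists; many entries are narrative only
    notes: str = ""             # caveats, known breaks, "if X then check Y"


def compile_metric_layer(
    entries: List[WikiEntry], as_of: date
) -> Dict[Tuple[str, str], dict]:
    """Derive the latest formula in force per (metric, context) as of a date."""
    layer: Dict[Tuple[str, str], dict] = {}
    # Process oldest first so a later definition overrides an earlier one.
    for e in sorted(entries, key=lambda e: e.effective):
        if e.expression is None or e.effective > as_of:
            continue  # narrative-only or not yet in force; it stays in the wiki
        layer[(e.metric, e.context)] = {
            "expression": e.expression,
            "effective": e.effective.isoformat(),
            "notes": e.notes,
        }
    return layer


# Made-up sample entries, for illustration only.
entries = [
    WikiEntry("active_users", "mobile", date(2023, 1, 1),
              "COUNT(DISTINCT user_id)", "Original definition."),
    WikiEntry("active_users", "mobile", date(2024, 3, 1),
              "COUNT(DISTINCT device_id)",
              "Instrumentation changed; not comparable to earlier periods."),
    WikiEntry("active_users", "mobile", date(2024, 4, 1),
              None, "A backfill double-counted one week; discount it."),
]

layer = compile_metric_layer(entries, as_of=date(2024, 6, 1))
print(layer[("active_users", "mobile")]["expression"])  # COUNT(DISTINCT device_id)
```

The compiled layer keeps one definition per metric and context, while the history and the narrative-only entry remain in the wiki, which is the sense in which the governed interface is downstream of it.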

The organizational brain, with octopus arms

The wiki becomes the organization’s brain: a living memory of intent, interpretation, exceptions, and procedures. AI becomes the octopus arms: executing queries, generating analyses, navigating ambiguity, and feeding new information back into the brain.

If your AI-on-data initiative isn’t gaining adoption, it’s probably not because your semantic layer is imperfect. It’s because you’re trying to encode a living, political, temporal, role-dependent system of meaning into a centralized, static interface.

Stop.

Build the wiki.